Tutorial - Ultralytics YOLOv8

Let's run Ultralytics YOLOv8 on Jetson with NVIDIA TensorRT.

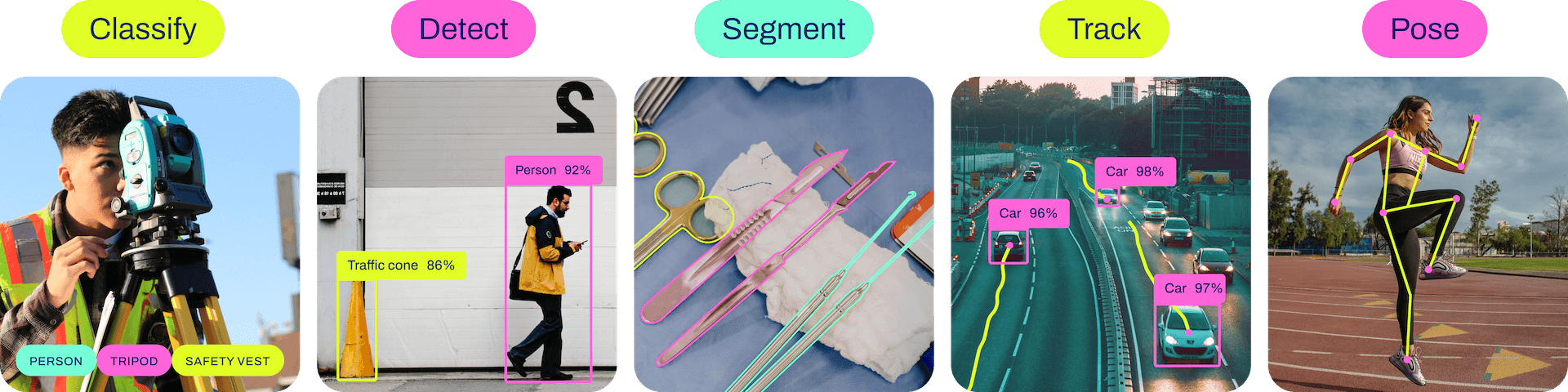

Ultralytics YOLOv8 is a state-of-the-art (SOTA) model that builds on the success of previous YOLO versions and introduces new features and improvements that further boost performance and flexibility. YOLOv8 is designed to be fast, accurate, and easy to use, making it an excellent choice for a wide range of tasks: object detection and tracking, instance segmentation, image classification, and pose estimation.

What you need

- One of the following Jetson devices:
  - Jetson AGX Orin (64GB)
  - Jetson AGX Orin (32GB)
  - Jetson Orin NX (16GB)
  - Jetson Orin Nano (8GB)
  - Jetson Nano (4GB)
- Running one of the following versions of JetPack:
  - JetPack 4 (L4T r32.x)
  - JetPack 5 (L4T r35.x)
  - JetPack 6 (L4T r36.x)

How to start

Run the commands below for your JetPack version to pull the corresponding Docker container and run it on Jetson.

JetPack 4:

```bash
t=ultralytics/ultralytics:latest-jetson-jetpack4
sudo docker pull $t && sudo docker run -it --ipc=host --runtime=nvidia $t
```

JetPack 5:

```bash
t=ultralytics/ultralytics:latest-jetson-jetpack5
sudo docker pull $t && sudo docker run -it --ipc=host --runtime=nvidia $t
```

JetPack 6:

```bash
t=ultralytics/ultralytics:latest-jetson-jetpack6
sudo docker pull $t && sudo docker run -it --ipc=host --runtime=nvidia $t
```
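To pick the right image automatically, the tag can be derived from the device's L4T release using the r32/r35/r36 to JetPack 4/5/6 mapping listed under "What you need". The sketch below is a hypothetical helper (`container_tag` is not part of any Ultralytics or NVIDIA tool). It assumes the L4T version can be read from `/etc/nv_tegra_release`, whose first line typically looks like `# R35 (release), REVISION: 4.1, ...`.

```python
import re
from pathlib import Path

# L4T major release -> JetPack major version (from the requirements list).
L4T_TO_JETPACK = {32: 4, 35: 5, 36: 6}


def container_tag(release_text: str) -> str:
    """Return the Ultralytics Jetson image tag for an nv_tegra_release line (hypothetical helper)."""
    match = re.search(r"\bR(\d+)\b", release_text)  # e.g. "R35" -> 35
    if match is None:
        raise ValueError("could not find an L4T release such as 'R35' in the text")
    l4t_major = int(match.group(1))
    try:
        jetpack = L4T_TO_JETPACK[l4t_major]
    except KeyError:
        raise ValueError(f"unsupported L4T release r{l4t_major}") from None
    return f"ultralytics/ultralytics:latest-jetson-jetpack{jetpack}"


if __name__ == "__main__":
    release_file = Path("/etc/nv_tegra_release")  # assumed location on Jetson
    if release_file.exists():
        print(container_tag(release_file.read_text()))
```

The printed tag is the value to assign to `t` before running the `docker pull` and `docker run` commands.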

Convert model to TensorRT and run inference

The example below converts the YOLOv8n PyTorch model to TensorRT and then runs inference with the exported engine.

Example

Python:

```python
from ultralytics import YOLO

# Load a YOLOv8n PyTorch model
model = YOLO("yolov8n.pt")

# Export the model to TensorRT
model.export(format="engine")  # creates 'yolov8n.engine'

# Load the exported TensorRT model
trt_model = YOLO("yolov8n.engine")

# Run inference
results = trt_model("https://ultralytics.com/images/bus.jpg")
```

CLI:

```bash
# Export a YOLOv8n PyTorch model to TensorRT format
yolo export model=yolov8n.pt format=engine  # creates 'yolov8n.engine'

# Run inference with the exported model
yolo predict model=yolov8n.engine source='https://ultralytics.com/images/bus.jpg'
```
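The export call in the example uses the default precision (FP32). The benchmarks further down also report FP16 and INT8 engines, which Ultralytics exposes through the `half` and `int8` export arguments. The sketch below is a hypothetical helper (`trt_export_kwargs` is not part of Ultralytics) that only assembles a keyword-argument dictionary for `model.export(...)`; check the Export page for the full argument list and for any calibration settings INT8 export may need.

```python
from typing import Any, Dict


def trt_export_kwargs(precision: str = "fp32", imgsz: int = 640, dynamic: bool = False) -> Dict[str, Any]:
    """Build keyword arguments for YOLO.export() targeting TensorRT (hypothetical helper)."""
    precision = precision.lower()
    if precision not in {"fp32", "fp16", "int8"}:
        raise ValueError(f"unsupported precision: {precision!r}")
    # format="engine" selects TensorRT; imgsz and dynamic control the input shape.
    kwargs: Dict[str, Any] = {"format": "engine", "imgsz": imgsz, "dynamic": dynamic}
    if precision == "fp16":
        kwargs["half"] = True  # FP16 engine
    elif precision == "int8":
        kwargs["int8"] = True  # INT8 engine; may need calibration data
    return kwargs


if __name__ == "__main__":
    print(trt_export_kwargs("fp16"))
```

With a loaded model, usage would look like `model.export(**trt_export_kwargs("fp16"))`. Keeping the arguments in one dictionary makes it easy to export all three precisions in a loop when reproducing the benchmark columns.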

| Manufacturing | Sports | Wildlife |
|---|---|---|
| Vehicle Spare Parts Detection | Football Player Detection | Tiger Pose Detection |

Note

Visit the Export page to access additional arguments when exporting models to different formats. Note that with the default arguments (`dynamic=False`), the exported model requires inference with fixed image dimensions. To change the input source for inference, refer to the Model Prediction page.
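With `dynamic=False`, the engine is built for one fixed input shape (640x640 with the default `imgsz`, assuming a square input), so every image must be resized to fit it. A common way to do that without distorting the image is letterboxing: scale by the smaller of the two ratios, then pad the remainder. The sketch below computes only that geometry; `letterbox_params` is a hypothetical helper, and Ultralytics' own preprocessing may differ in details such as rounding and padding color.

```python
from typing import Tuple


def letterbox_params(src_h: int, src_w: int, size: int = 640) -> Tuple[float, Tuple[int, int], Tuple[int, int]]:
    """Return (scale, (new_w, new_h), (pad_left, pad_top)) to fit an image into size x size."""
    # Use the smaller ratio so the whole image fits and the aspect ratio is kept.
    scale = min(size / src_h, size / src_w)
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    # Remaining space on each axis is split between the two sides.
    pad_w, pad_h = size - new_w, size - new_h
    return scale, (new_w, new_h), (pad_w // 2, pad_h // 2)


if __name__ == "__main__":
    print(letterbox_params(1080, 810))
```

For a 1080x810 (height x width) image, the scale is 640/1080, the resized image is 480 pixels wide and 640 tall, and 80 pixels of padding go on the left and on the right.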

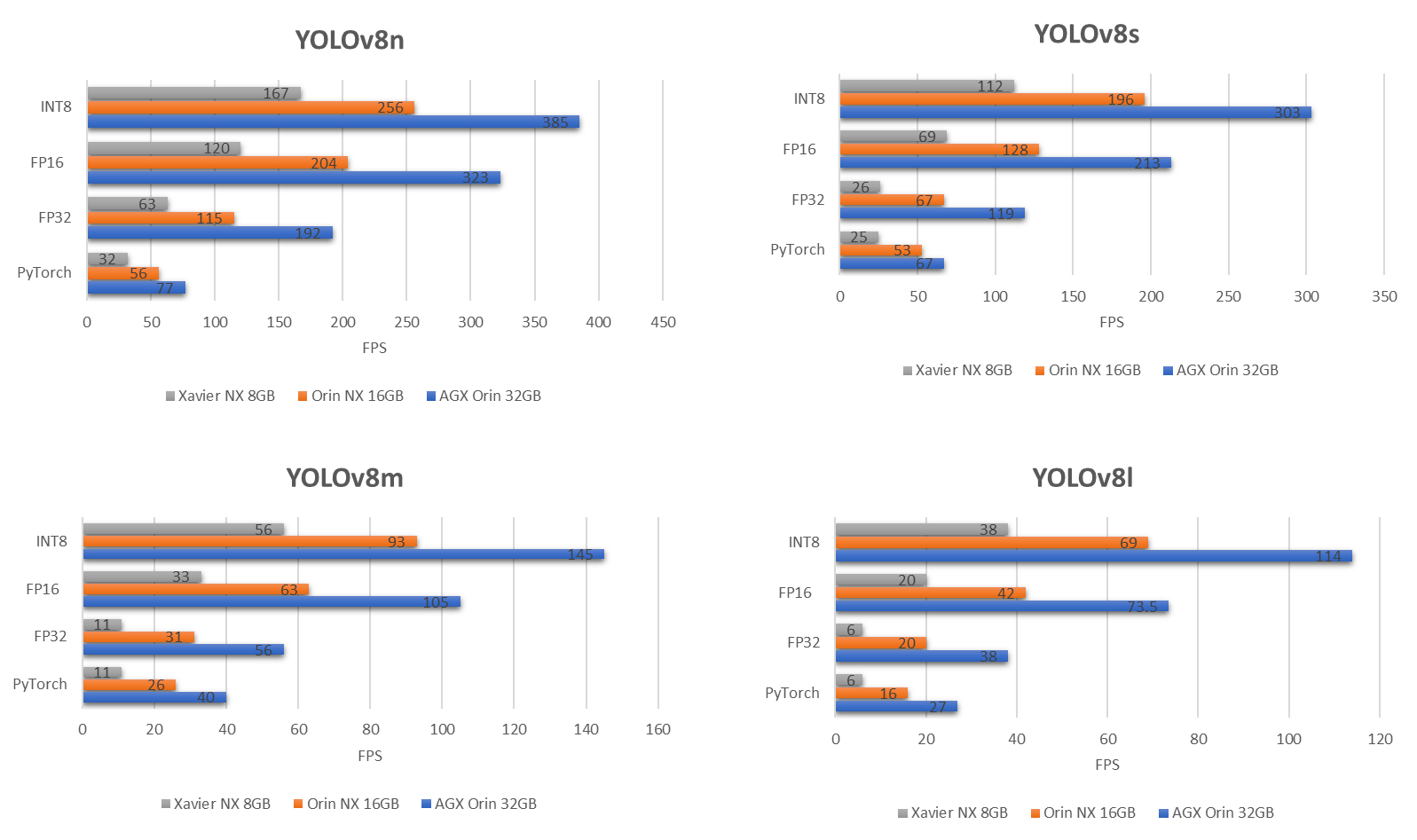

Benchmarks

Benchmarks of the YOLOv8 variants with TensorRT were run by Seeed Studio on their reComputer systems:

| Model | PyTorch | FP32 | FP16 | INT8 |
|---|---|---|---|---|
| YOLOv8n | 32 | 63 | 120 | 167 |
| YOLOv8s | 25 | 26 | 69 | 112 |
| YOLOv8m | 11 | 11 | 33 | 56 |
| YOLOv8l | 6 | 6 | 20 | 38 |

| Model | PyTorch | FP32 | FP16 | INT8 |
|---|---|---|---|---|
| YOLOv8n | 56 | 115 | 204 | 256 |
| YOLOv8s | 53 | 67 | 128 | 196 |
| YOLOv8m | 26 | 31 | 63 | 93 |
| YOLOv8l | 16 | 20 | 42 | 69 |

| Model | PyTorch | FP32 | FP16 | INT8 |
|---|---|---|---|---|
| YOLOv8n | 77 | 192 | 323 | 385 |
| YOLOv8s | 67 | 119 | 213 | 303 |
| YOLOv8m | 40 | 56 | 105 | 145 |
| YOLOv8l | 27 | 38 | 73.5 | 114 |

- FP32/FP16/INT8 columns use TensorRT; all values are in frames per second (FPS)

- Source: Seeed Studio's original post with these benchmarks
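The tables report throughput, so it can help to convert FPS into per-frame latency and into speedup over the PyTorch baseline. This short sketch does that arithmetic for the first benchmark table; it assumes frames are processed one at a time, so latency is simply 1000 / FPS. The names `latency_ms` and `speedup` are illustrative.

```python
from typing import Dict

# First benchmark table, in frames per second (FPS).
FIRST_TABLE_FPS: Dict[str, Dict[str, float]] = {
    "YOLOv8n": {"PyTorch": 32, "FP32": 63, "FP16": 120, "INT8": 167},
    "YOLOv8s": {"PyTorch": 25, "FP32": 26, "FP16": 69, "INT8": 112},
    "YOLOv8m": {"PyTorch": 11, "FP32": 11, "FP16": 33, "INT8": 56},
    "YOLOv8l": {"PyTorch": 6, "FP32": 6, "FP16": 20, "INT8": 38},
}


def latency_ms(fps: float) -> float:
    """Average time per frame in milliseconds, assuming sequential processing."""
    return 1000.0 / fps


def speedup(table: Dict[str, Dict[str, float]], model: str, backend: str, baseline: str = "PyTorch") -> float:
    """Throughput of `backend` relative to `baseline` for one model."""
    return table[model][backend] / table[model][baseline]


if __name__ == "__main__":
    for name, row in FIRST_TABLE_FPS.items():
        print(f"{name}: INT8 {latency_ms(row['INT8']):.1f} ms/frame, "
              f"{speedup(FIRST_TABLE_FPS, name, 'INT8'):.1f}x vs PyTorch")
```

For YOLOv8n, INT8 works out to about 6.0 ms per frame, roughly 5.2x the PyTorch throughput.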

Further reading

To learn more, see our comprehensive guide on running Ultralytics YOLOv8 on NVIDIA Jetson, which includes benchmarks.

Note

Ultralytics YOLOv8 models are offered under the AGPL-3.0 License, which is an OSI-approved open-source license and is ideal for students and enthusiasts, promoting open collaboration and knowledge sharing. See the LICENSE file for more details.

One-Click Run Ultralytics YOLO on Jetson Orin - by Seeed Studio jetson-examples

Quickstart ⚡

- Install the package:

  ```bash
  pip install jetson-examples
  ```

- Restart your reComputer:

  ```bash
  sudo reboot
  ```

- Run Ultralytics YOLO on Jetson with one command:

  ```bash
  reComputer run ultralytics-yolo
  ```

- Enter `http://127.0.0.1:5001` or `http://device_ip:5001` in your browser to access the Web UI.
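To check from a script (for example over SSH) whether the Web UI has come up, a minimal standard-library sketch follows. `web_ui_url` and `is_reachable` are hypothetical helpers; port 5001 comes from the last step, and for remote checks the host must be replaced with your device's IP address.

```python
import urllib.error
import urllib.request


def web_ui_url(host: str = "127.0.0.1", port: int = 5001) -> str:
    """URL of the Web UI on the given host and port."""
    return f"http://{host}:{port}"


def is_reachable(url: str, timeout: float = 3.0) -> bool:
    """True if an HTTP server answers at `url` within `timeout` seconds."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # the server answered, just not with a 2xx status
    except (urllib.error.URLError, OSError):
        return False  # refused, unresolved host, or timed out


if __name__ == "__main__":
    url = web_ui_url()  # use web_ui_url("<device_ip>") when checking remotely
    print(url, "reachable" if is_reachable(url) else "not reachable")
```

`HTTPError` is caught before `URLError` because it is a subclass; any HTTP response, even an error page, means the service is listening.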

For more details, read Jetson-Example: Run Ultralytics YOLO Platform Service on NVIDIA Jetson Orin.